Overview

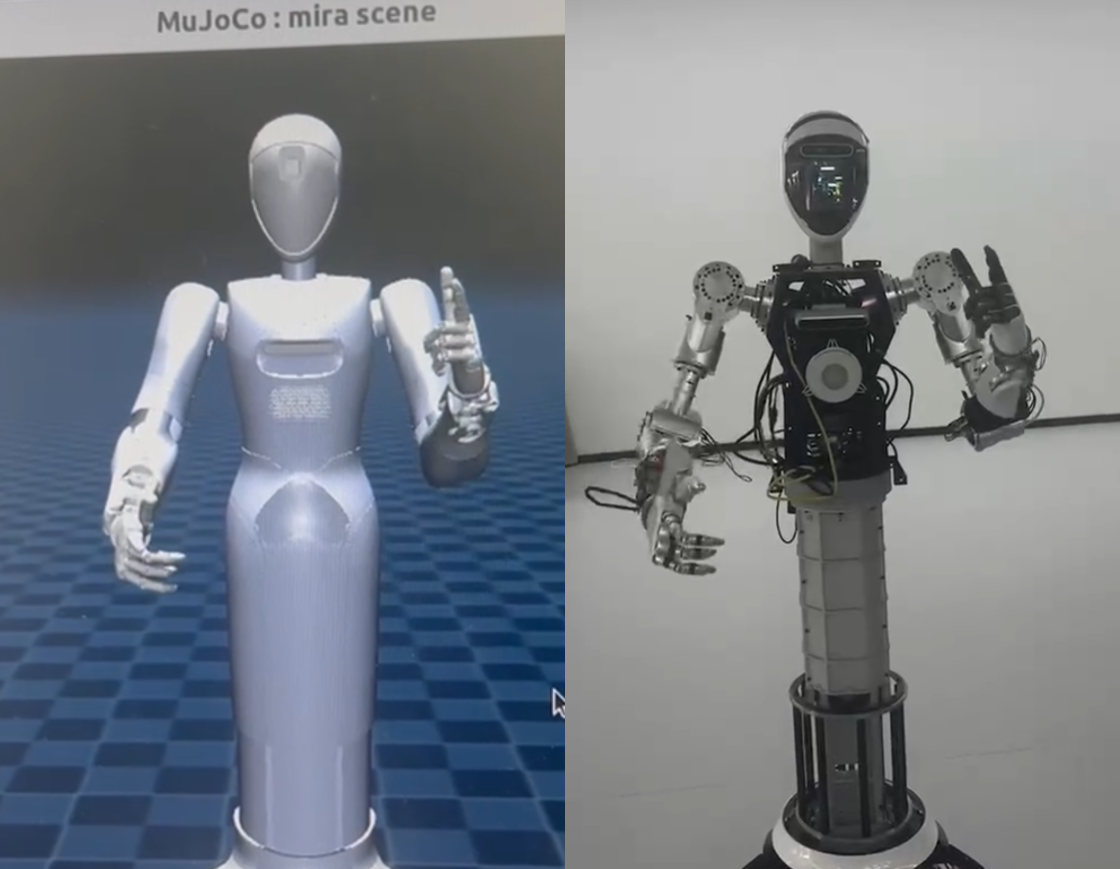

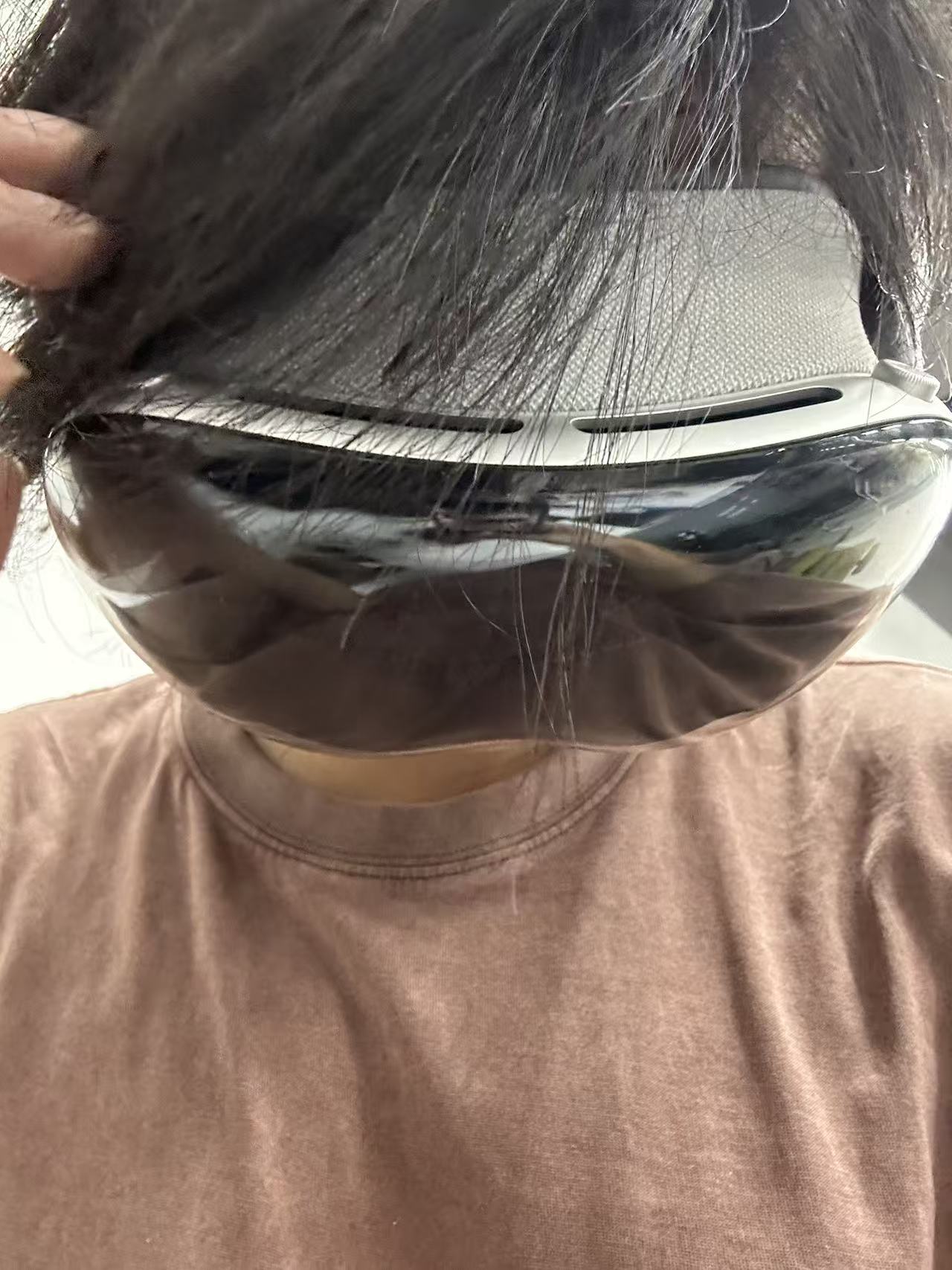

This project introduces a mixed-reality teleoperation system for humanoid robots using the Apple Vision Pro. The system translates natural human gestures into precise robotic movements in real time, enabling intuitive control without complex controllers or extensive training.

Approach

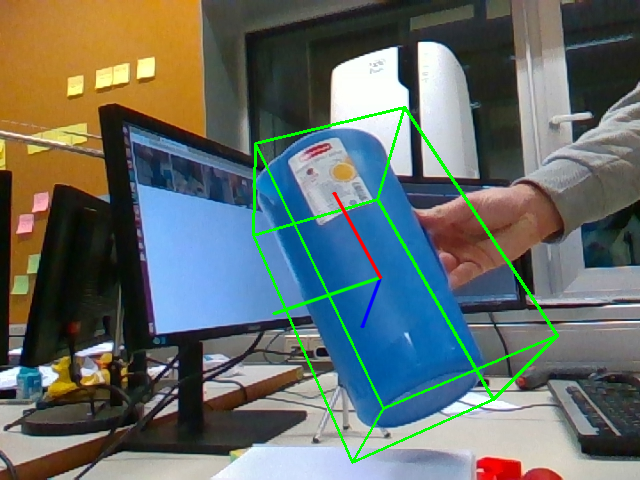

We implemented a real-time inverse kinematics solver that maps human hand and arm movements to the robot's high-degree-of-freedom (high-DOF) kinematic chain. The system incorporates depth-based collision avoidance to prevent unintended contact and provides haptic feedback to the user, closing the control loop between operator and robot.
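To make the inverse kinematics step concrete, here is a minimal sketch of one common approach, damped least squares, applied to a planar three-link arm. It is an illustration under stated assumptions, not the project's solver: the link lengths, damping value, step limit, and all function names (`forward_kinematics`, `jacobian`, `dls_step`, `solve_ik`) are hypothetical, and a real humanoid arm would use a full 3D kinematic model with orientation targets and joint limits.

```python
import math


def forward_kinematics(q, link_lengths):
    """Return the (x, y) end-effector position of a planar serial arm."""
    x = y = angle = 0.0
    for qi, li in zip(q, link_lengths):
        angle += qi
        x += li * math.cos(angle)
        y += li * math.sin(angle)
    return x, y


def jacobian(q, link_lengths):
    """Return the 2 x n position Jacobian as (row_x, row_y)."""
    n = len(q)
    cumulative = []
    angle = 0.0
    for qi in q:
        angle += qi
        cumulative.append(angle)
    # Joint j moves every link from j to the tip.
    row_x = [-sum(link_lengths[k] * math.sin(cumulative[k]) for k in range(j, n))
             for j in range(n)]
    row_y = [sum(link_lengths[k] * math.cos(cumulative[k]) for k in range(j, n))
             for j in range(n)]
    return row_x, row_y


def dls_step(q, target, link_lengths, damping=0.05, max_step=0.2):
    """One damped-least-squares update: dq = J^T (J J^T + damping^2 I)^-1 e."""
    x, y = forward_kinematics(q, link_lengths)
    ex, ey = target[0] - x, target[1] - y
    jx, jy = jacobian(q, link_lengths)
    # Build the symmetric 2 x 2 matrix A = J J^T + damping^2 I.
    a11 = sum(v * v for v in jx) + damping ** 2
    a12 = sum(u * v for u, v in zip(jx, jy))
    a22 = sum(v * v for v in jy) + damping ** 2
    det = a11 * a22 - a12 * a12  # positive because damping > 0
    # Solve A w = e in closed form, then map back with J^T.
    wx = (a22 * ex - a12 * ey) / det
    wy = (a11 * ey - a12 * ex) / det
    dq = [jx[i] * wx + jy[i] * wy for i in range(len(q))]
    # Limit the largest joint change per step (assumed safety limit, in radians).
    biggest = max(abs(d) for d in dq)
    scale = min(1.0, max_step / biggest) if biggest > 0 else 1.0
    return [qi + scale * d for qi, d in zip(q, dq)]


def solve_ik(q0, target, link_lengths, tol=1e-4, max_iters=200):
    """Iterate DLS steps until the tip is within tol of the target."""
    q = list(q0)
    for _ in range(max_iters):
        x, y = forward_kinematics(q, link_lengths)
        if math.hypot(target[0] - x, target[1] - y) < tol:
            break
        q = dls_step(q, target, link_lengths)
    return q
```

The damping term keeps the update bounded near singular poses (for example, a fully stretched arm), at the cost of slower convergence there. In a teleoperation loop, a single `dls_step` per tracked hand pose would typically be enough, since the target moves only slightly between frames.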

Deliverables

The final outcome is a fully functional teleoperation suite compatible with ROS 2-based humanoid robots. It includes a Unity-based VR interface, a Python backend for kinematic solving, and a low-latency communication bridge between the two.
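As an illustration of what a low-latency bridge message could look like, the sketch below packs a joint-angle command into a compact binary payload suitable for a datagram transport. The wire format, field layout, and function names are assumptions made for this example; the project's actual protocol is not described here.

```python
import struct
import time

# Hypothetical wire format (little-endian, no padding):
#   uint32 sequence number, uint64 timestamp in nanoseconds, uint16 joint count,
#   followed by one float32 per joint angle in radians.
HEADER = struct.Struct("<IQH")


def pack_joint_command(seq, joint_angles, timestamp_ns=None):
    """Serialize a joint command into bytes."""
    if timestamp_ns is None:
        timestamp_ns = time.monotonic_ns()
    header = HEADER.pack(seq, timestamp_ns, len(joint_angles))
    body = struct.pack(f"<{len(joint_angles)}f", *joint_angles)
    return header + body


def unpack_joint_command(payload):
    """Parse bytes produced by pack_joint_command."""
    seq, timestamp_ns, count = HEADER.unpack_from(payload, 0)
    angles = struct.unpack_from(f"<{count}f", payload, HEADER.size)
    return seq, timestamp_ns, list(angles)
```

A fixed binary layout like this keeps each message small (14 bytes of header plus 4 bytes per joint), and the sequence number lets the receiver discard stale or out-of-order commands instead of replaying them.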