Overview

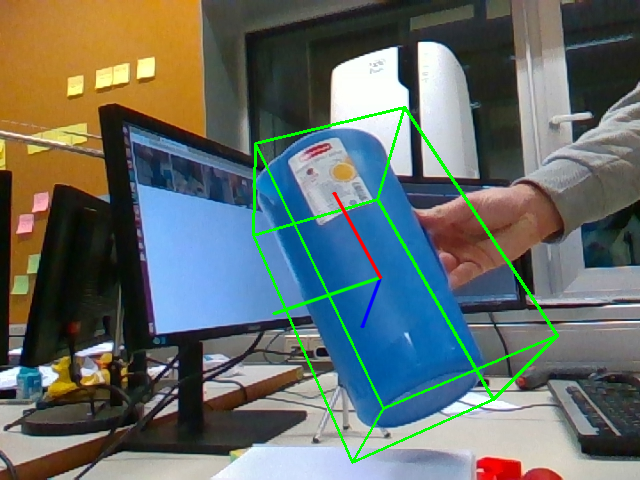

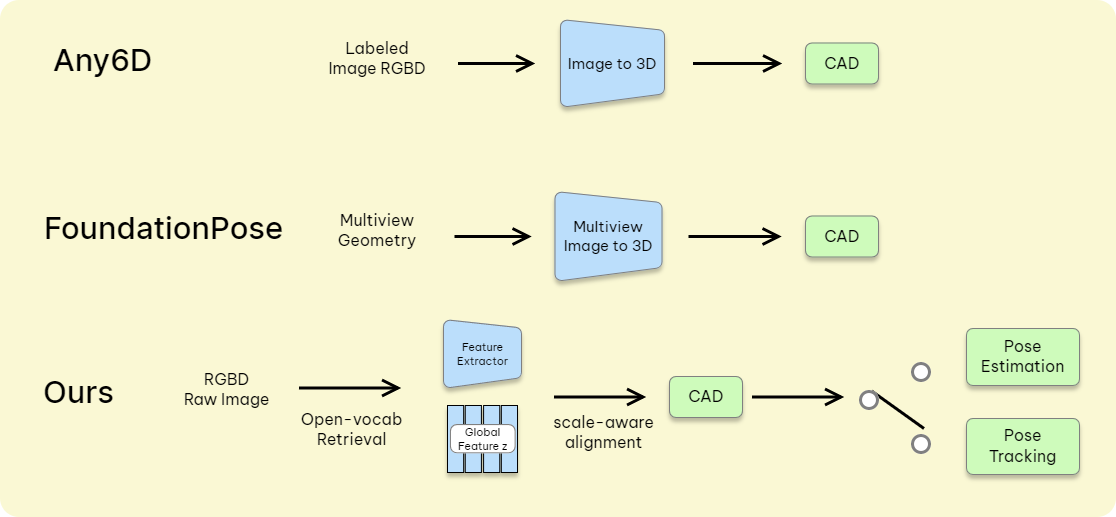

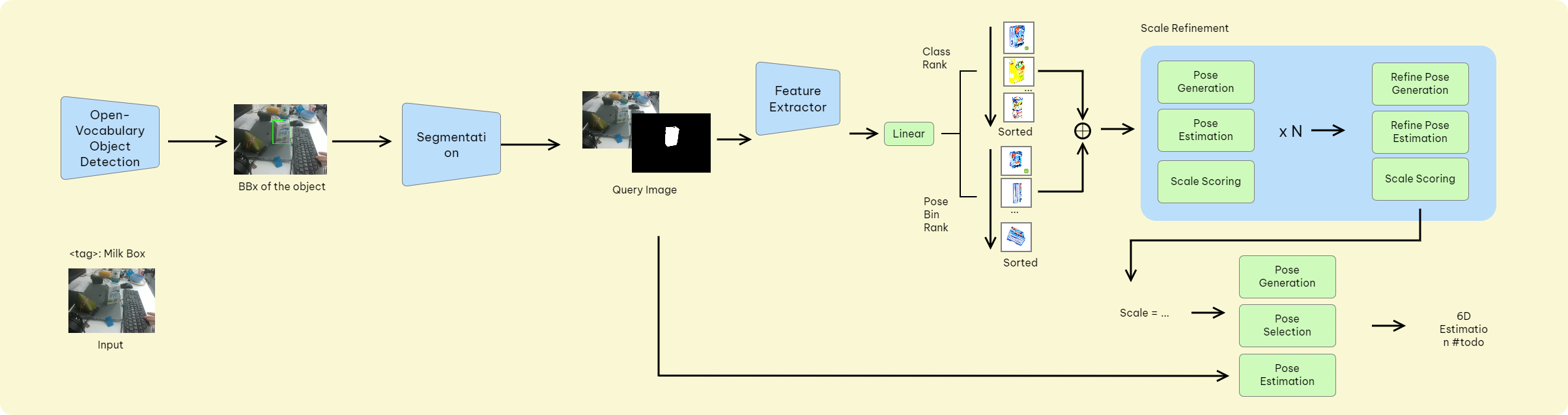

This research addresses the challenge of enabling robots to manipulate novel objects without extensive retraining. We developed a one-shot 6D pose estimation pipeline that allows a robot to understand and track an object's position and orientation in 3D space given only a single reference image or demonstration.

Approach

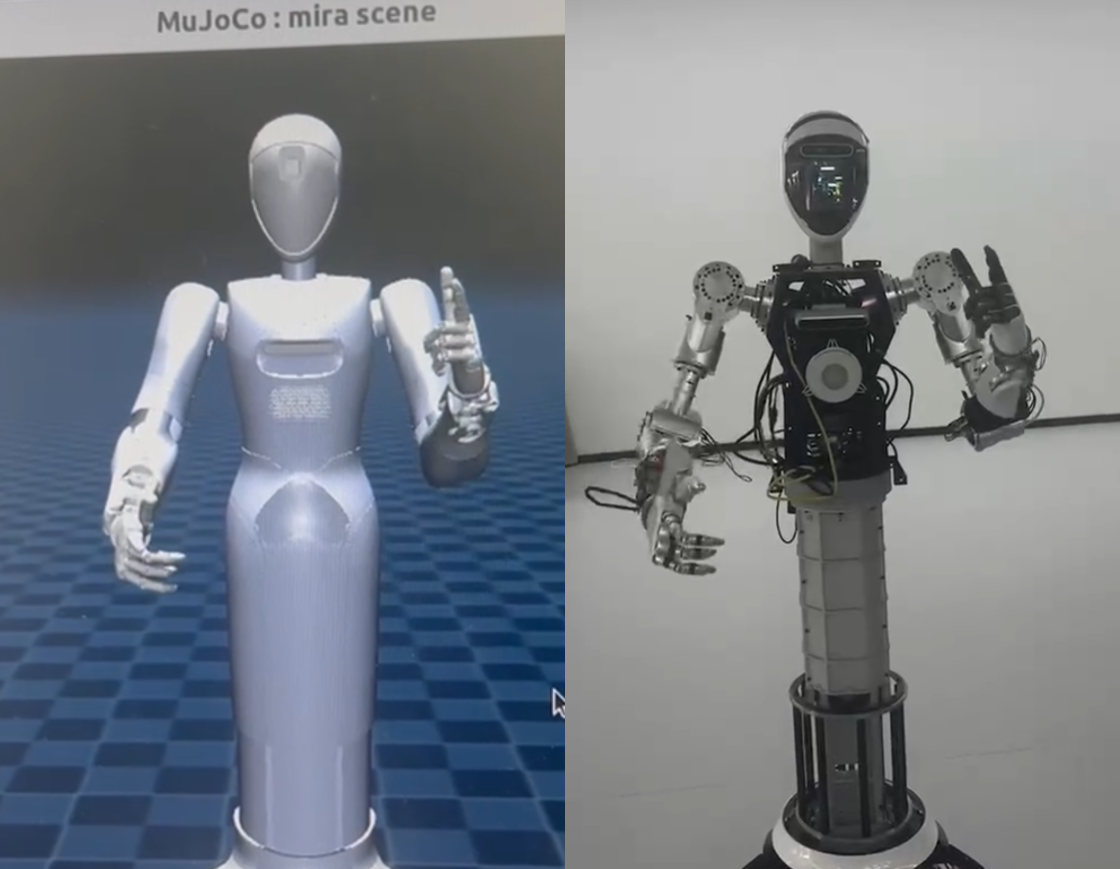

Our approach leverages synthetic data generation to bridge the gap between limited real-world samples and deep learning requirements. We built a procedural generation pipeline in Blender to create large-scale synthetic training datasets from single object scans. For real-time tracking, we integrated a Kalman filter-based refinement step to smooth out jitter and handle temporary occlusions.
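To illustrate the refinement step, here is a minimal sketch of a constant-velocity Kalman filter applied to one translation axis of the estimated pose. It is not the project's implementation: the state layout, the constant-velocity motion model, the noise values, and the names `AxisKalman` and `smooth_track` are all assumptions made for illustration. A `None` measurement stands in for an occluded frame, in which case the filter only predicts. Orientation is left out, since rotations need separate handling.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class AxisKalman:
    """Constant-velocity Kalman filter for a single translation axis.

    Hypothetical sketch: state is (pos, vel); P = [[p00, p01], [p01, p11]].
    """
    pos: float = 0.0
    vel: float = 0.0
    p00: float = 1.0
    p01: float = 0.0
    p11: float = 1.0
    process_var: float = 1e-3  # assumed tuning value
    meas_var: float = 1e-2     # assumed tuning value

    def predict(self, dt: float) -> None:
        # State transition x = F x with F = [[1, dt], [0, 1]].
        self.pos += self.vel * dt
        # Covariance P = F P F^T + Q, with a simplified diagonal Q.
        q = self.process_var * dt
        p00 = self.p00 + dt * (2.0 * self.p01 + dt * self.p11) + q
        p01 = self.p01 + dt * self.p11
        p11 = self.p11 + q
        self.p00, self.p01, self.p11 = p00, p01, p11

    def update(self, z: float) -> None:
        # Measurement model H = [1, 0]: only position is observed.
        s = self.p00 + self.meas_var   # innovation variance
        k0 = self.p00 / s              # Kalman gain for position
        k1 = self.p01 / s              # Kalman gain for velocity
        y = z - self.pos               # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        # P = (I - K H) P, written out for the 2x2 case.
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p11 = p00, p01, p11


def smooth_track(
    measurements: Sequence[Optional[float]], dt: float = 1.0
) -> List[float]:
    """Filter a sequence of per-frame positions; None marks an occluded frame."""
    kf = AxisKalman()
    out: List[float] = []
    for z in measurements:
        kf.predict(dt)
        if z is not None:  # skip the update while the object is occluded
            kf.update(z)
        out.append(kf.pos)
    return out


if __name__ == "__main__":
    # Object moving at 0.1 units per frame, then three occluded frames.
    frames = [0.1 * i for i in range(1, 21)] + [None, None, None]
    print(smooth_track(frames)[-4:])
```

In a full tracker, one such filter (or a single joint filter) would run per translation axis, and the two variance parameters trade responsiveness against smoothness.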

Deliverables

The project resulted in a robust 6D pose estimation pipeline, a synthetic data generation tool for Blender, and a 1,300-object dataset for training and evaluation.